56% Infrastructure Optimization and 43% Cost Reduction Achieved Through AI/ML-Powered Analytics for a Fortune 200 Sportswear Manufacturer

Business Need

- Data Classification for Strategic Utilization: The company needed a way to understand the types and distribution of data within its Isilon system. This classification was crucial for maximizing data utilization and optimizing storage efficiency.

- Streamlined Storage & Cost Reduction: The company wanted to migrate from its legacy infrastructure to a more advanced NetApp system, with a seamless transition and lower costs.

- Enhanced Data Accessibility and Workflow Efficiency: Speeding up data access and retrieval was paramount. This required a meticulous data migration to the high-performance NetApp infrastructure.

- Tackling Dark Data – A Multifaceted Approach: The company needed a robust strategy to identify and manage “dark data” (inactive and potentially risky data). This strategy had to address several aspects:

  - Security and Compliance: Mitigating security threats and ensuring adherence to data regulations.

  - Privacy and Operational Efficiency: Safeguarding data privacy while optimizing operational processes.

  - Storage Cost Reduction and Reputation Management: Minimizing the storage expenses associated with dark data and preserving brand reputation by avoiding data breaches.

  - Data Quality Improvement and Business Insights: Enhancing data quality while unlocking business insights hidden within the data.

Challenges Faced

- Limited Data Visibility: The company did not know what types of data were stored within the Isilon system. This “data knowledge deficiency” prevented it from making informed decisions about data utilization, leading to:

  - Security Vulnerabilities: Unidentified sensitive data within the system increased the risk of security breaches.

  - Compliance Risks: Without knowing what data it held, the company risked violating data privacy regulations.

  - Suboptimal Storage Utilization: Without data classification, storage resources were allocated inefficiently.

- The Dark Data Dilemma: Inactive data that had accumulated over a two-year period was a growing burden on the storage systems. This “dark data” amplified security risks and wasted valuable storage resources.

- Escalating Storage Costs: Inefficient storage practices led to rising storage expenses, putting a strain on the company’s budget.

- Inefficient Storage Infrastructure: The existing infrastructure was outdated and costly to maintain, which further hindered the company’s ability to manage its data effectively and economically.

Solution Offered

AI-Driven Data Intelligence

Unveiling Hidden Dark Data

Data-Driven Storage Optimization

Following the comprehensive data analysis, UDM provided tailored storage optimization recommendations. These included:

- Archiving Cold Data: The software suggested archiving cold data to a cost-effective cloud storage solution like Azure Cool Blob, freeing up valuable storage space on the primary infrastructure.

- Purging After Verification: For certain categories of inactive data, the software recommended secure purging after a thorough verification process to ensure no critical information was lost.
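As a rough illustration of how cold data might be identified before archiving or purging, the sketch below buckets files by how long they have gone untouched. It is not the UDM software's method: the function name `classify_by_age`, the two-year threshold constant, and the use of filesystem access and modification timestamps are all assumptions made for this example.

```python
import os
import time
from pathlib import Path

SECONDS_PER_DAY = 86_400
COLD_AFTER_DAYS = 730  # assumption: "cold" means untouched for about two years


def classify_by_age(root, now=None, cold_after_days=COLD_AFTER_DAYS):
    """Walk `root` and split files into 'active' and 'cold' buckets.

    Each entry is a (path, size_in_bytes) tuple. A file counts as cold when
    neither its access time nor its modification time is more recent than
    the threshold. Hypothetical helper, not part of any vendor product.
    """
    now = time.time() if now is None else now
    result = {"active": [], "cold": []}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                st = path.stat()
            except OSError:
                continue  # skip unreadable files and broken symlinks
            last_touched = max(st.st_atime, st.st_mtime)
            age_days = (now - last_touched) / SECONDS_PER_DAY
            bucket = "cold" if age_days >= cold_after_days else "active"
            result[bucket].append((str(path), st.st_size))
    return result
```

Files in the `cold` bucket would be candidates for archival review only. Access times can be unreliable on volumes mounted with options such as `noatime`, so any purge decision would still need the verification step described above.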

Business Impact

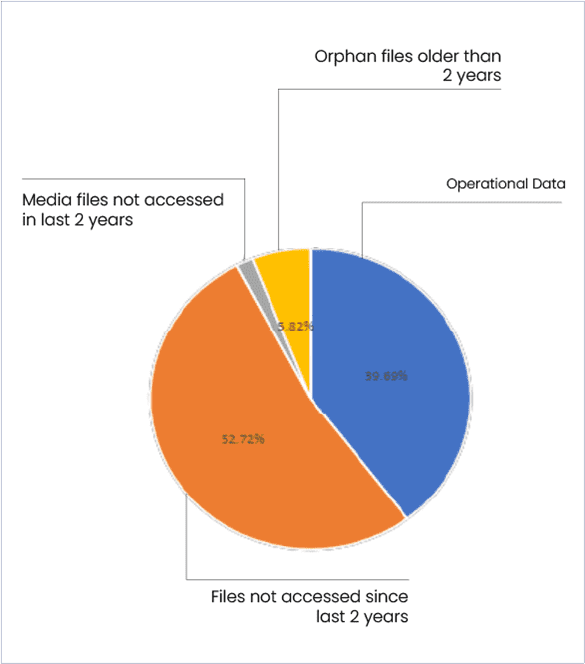

Risk Management: The analysis uncovered 3.17 TB of inactive media files and 10.89 TB of orphaned data, both of which had been untouched for two years. These findings led to strategic recommendations for tiering to object storage or archival, with the potential for secure deletion after verification. Additionally, 130 GB of PST files, untouched for a year, were earmarked for archival or deletion, further optimizing storage usage.

Comprehensive Data Optimization: The UDM software’s comprehensive analysis revealed that approximately 60.31% of the entire dataset was suitable for archiving. This data-driven approach to storage management resulted in a projected cost reduction of 43%, directly impacting the company’s bottom line.

Cost Reduction: The UDM software analyzed 187 TB of data and identified 98.59 TB (approximately 52.7%) as untouched for two years, making it ideal for archiving. By moving this data to more cost-effective storage solutions, the company unlocked substantial storage efficiency, reducing the strain on their primary infrastructure.
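The percentage quoted above can be checked with simple arithmetic. The short sketch below recomputes it from the two figures in the text; the helper name `share_percent` is hypothetical and used only for illustration.

```python
def share_percent(part_tb, total_tb):
    """Return part_tb as a percentage of total_tb, rounded to one decimal."""
    if total_tb <= 0:
        raise ValueError("total_tb must be positive")
    return round(100 * part_tb / total_tb, 1)


ANALYZED_TB = 187.0   # total data analyzed, from the case study
UNTOUCHED_TB = 98.59  # data untouched for two years, from the case study

untouched_share = share_percent(UNTOUCHED_TB, ANALYZED_TB)
print(f"Untouched for two years: {untouched_share}% of analyzed data")
```

Running it reproduces the approximately 52.7% share stated in the text.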