Artificial Intelligence’s evolution is nothing short of remarkable. Large Language Models (LLMs) and Specialized Language Models (SLMs) have ushered in an era where machines aren’t just tools—they’re collaborators, diagnosing medical issues, advising on financial decisions, and analyzing complex information. But there’s a side to this story that doesn’t make the headlines: these Generative AI models are built on vast oceans of unstructured data, which, while giving high performance and are powerful, also carries a unique set of risks.

The Underlying Challenges in Unstructured Data Dependency

Imagine an architect designing a skyscraper without blueprints. That’s what we’re asking AI to do when it relies heavily on unstructured, chaotic data. Unstructured data set—email address, social media posts, medical records, transaction logs, financial information—is as messy as it sounds—is inherently messy, inconsistent, and riddled with redundant, outdated, and trivial (ROT) data. For models like ChatGPT and Google Bard, which rely heavily on such data to “learn” about our world, this lack of structure breeds noise, inaccuracies, and even unwanted biases. In fact, it’s estimated that up to 80% of all data today is unstructured, and much of it feeds the LLMs and SLMs shaping our digital interactions. As AI modeling leans heavily on LLMs and SLMs, unstructured data presents unique and pressing challenges, particularly concerning data quality and privacy risks.

The result? An unstable foundation for AI model reliability and safety.

Think of healthcare, where data such as patient notes, imaging reports, and medical diagnoses become the core inputs for AI models. A small inconsistency, like “no symptoms” versus “no notable symptoms,” can sway an AI’s interpretation, leading to skewed outputs—something that could have real-world consequences on patient care. Gartner estimates that poor data quality costs companies approximately $12.9 million annually—an expense that only escalates in high-stakes industries like healthcare.

Finance faces similar challenges. Take Bank of America, where unstructured data like emails and chat logs inform AI models designed to detect fraud and guide clients on insurance, investments, and retirement. Roughly 60% of its clients rely on these AI-driven insights, so accuracy is everything. We are sure they are doing it right but not its still a mammoth task to manage. When data is messy, legitimate transactions might get flagged as fraud, or actual fraud could slip through the cracks. It’s a reminder that with the incredible power of AI comes the need for relentless attention to data quality.

Moreover, the privacy implications of unstructured data present an equally formidable hurdle. Consumer sensitive information and confidential data—social security numbers, credit card details, health records— Personally Identifiable Information (PII) and Protected Health Information (PHI) often remains embedded within unstructured data.

Without meticulous data discovery, classification & tagging, backed by stringent privacy protocols, there’s a real risk of AI models unintentionally exposing private data leading to data leakage and risks associated with data security. History has shown that models can “memorize” and inadvertently disclose sensitive information, triggering risk of data regulatory scrutiny. In today’s environment, with frameworks like GDPR and CCPA, any breach not only incurs steep fines but also erodes public trust, which is increasingly difficult to rebuild in the AI-driven landscape.

A New Path Forward: Embracing Decentralized, Self-Service Data Management

Facing these challenges means rethinking how we handle data.

Confronting the issues around unstructured data in AI requires a fundamental shift from traditional, centralized data management to a more dynamic and decentralized model. Imagine a data environment where refinement happens at the source—where data is tagged, filtered, and organized by those who best understand its nuances. This decentralized, self-service data management approach empowers data and application owners across departments to take responsibility for data quality, security, privacy, compliance & optimization, ensuring that only the most accurate, relevant information flows into AI systems.

Instead of funnelling vast amounts of raw, unstructured data into a centralized repository, decentralized teams can process and prepare data locally. This refinement reduces data noise and enhances relevance, allowing AI models to draw insights from well-organized, secured, high-quality data, ultimately leading to more accurate and ethically sound outcomes.

Retail giants like Walmart illustrate the power of decentralized data management. By giving each store the autonomy to preprocess and categorize its own sales data, Walmart ensures that only insights tailored to local trends make it to the central AI. This method not only minimizes irrelevant data but also strengthens data relevance, providing a clearer view of patterns and enabling more accurate, context-specific analysis.

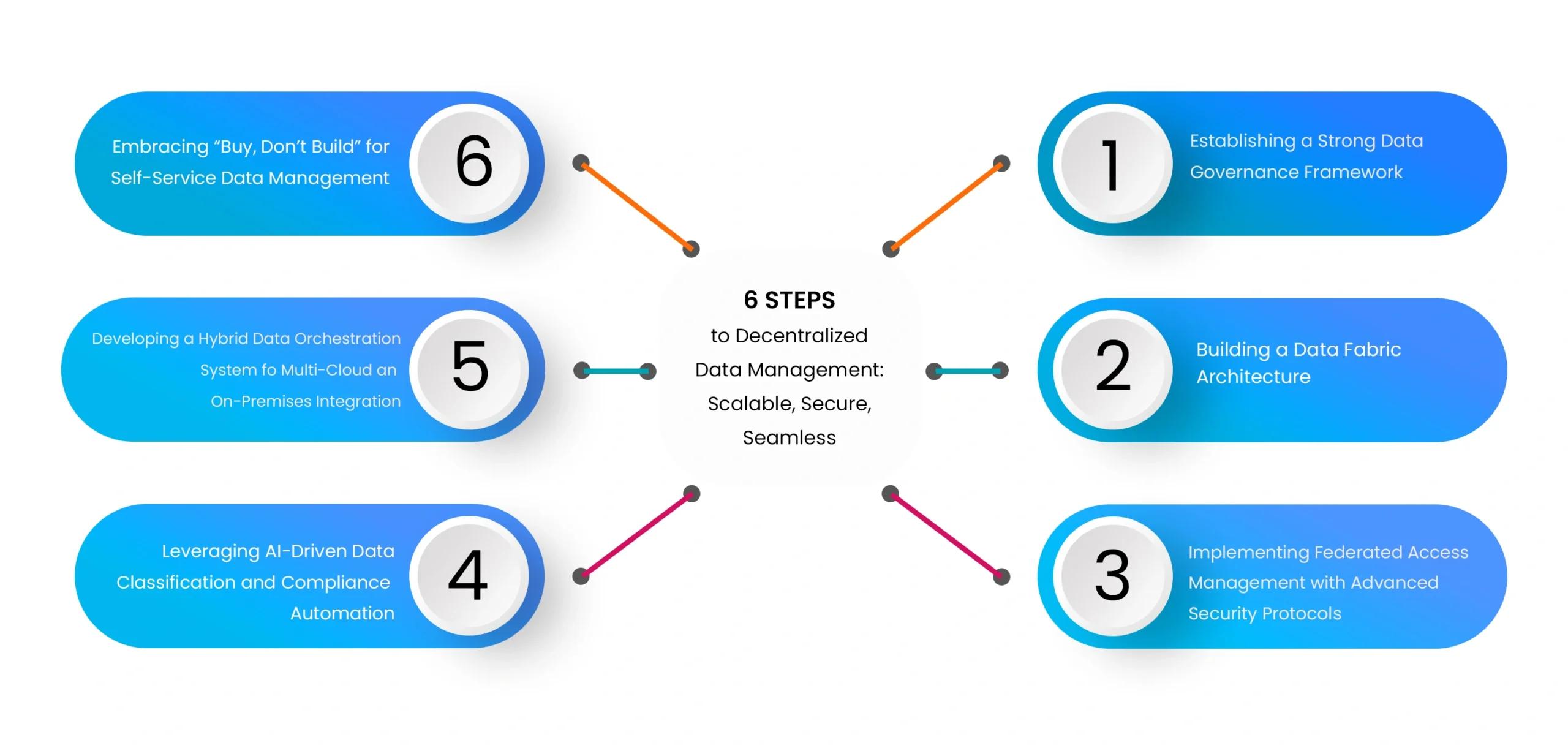

6 Steps to Decentralized Data Management: Scalable, Secure, Seamless

Implementing decentralized data management might sound complex, but it’s a strategy that brings massive value if done right. Let’s break it down step by step.

1. Establishing a Strong Data Governance Framework

First things first, you need a solid governance framework. Think of this as the backbone of your decentralized data setup. It’s about setting clear policies, standards, and best practices across all your teams so everyone knows the rules of the game. You want every department or team to understand exactly what’s expected when it comes to managing data. We’re talking about tools like data cataloging and role-based access control that make it easy for people to find and use data without compromising security. And automation? Absolutely. With automated auditing and monitoring in place, you’ll know exactly who accessed what data, when, and how. This setup ensures that every data action is trackable, which not only brings transparency but also keeps you compliant across your entire data network.

2. Building a Data Fabric Architecture

Now, let’s talk about the data fabric. Imagine a system that connects all your data sources, creating a single, unified layer for data access and control. You’re no longer moving data around physically from one place to another. Instead, the data fabric lets you access data in real-time, no matter where it’s stored—be it on-prem, in the cloud, or even a hybrid of both. This fabric is agile; it supports dynamic data virtualization, meaning you access data through logical views without creating redundant copies. That way, you get the speed and efficiency of real-time access without the hassle. Plus, with a strong metadata management layer, you get full visibility into where your data’s coming from and where it’s going. It’s like a map of all your data, making cross-functional insights simpler and faster to achieve.

3. Implementing Federated Access Management with Advanced Security Protocols

Security is a big piece of this puzzle, and in a decentralized environment, you need a system that can handle multiple layers of access control without slowing you down. Enter federated access management. This setup allows users to access data from various systems using a single set of credentials, keeping things smooth and secure. Think about it as a security umbrella that covers multiple environments, all while protecting data integrity. Adding multi-factor authentication and adaptive access control adds that extra layer of security, adapting access levels based on user behavior and risk. And since everything’s centralized, you get to monitor authentication patterns across the board. So if there’s any unusual activity, you’ll catch it early. This is transparency and control rolled into one, perfect for staying compliant with data protection regulations.

4. Leveraging AI-Driven Data Classification and Compliance Automation

Now, here’s where AI can really shine in decentralized data management. Instead of manually classifying and checking data for compliance (which takes ages), AI-driven tools handle it all. Advanced machine learning models can automatically categorize data based on sensitivity, compliance needs, and business importance. It’s like having a virtual data steward that’s constantly on duty. These AI tools also continuously check for compliance, meaning they’re always scanning your data against regulatory standards like GDPR, CCPA, and HIPAA. They’ll flag any data that doesn’t meet compliance requirements, allowing your team to focus on higher-level governance tasks rather than the nitty-gritty. This is automation working at its best, reducing errors, saving time, and keeping data secure and compliant across all departments.

5. Developing a Hybrid Data Orchestration System for Multi-Cloud and On-Premises Integration

Finally, hybrid data orchestration is the linchpin that keeps everything running smoothly across on-premises and multi-cloud setups. With a hybrid data orchestration system, your data moves seamlessly between environments, with real-time updates mirrored across all storage locations. This is key to avoiding those dreaded data silos. Plus, with workload balancing, you can distribute processing tasks based on resource availability, which means your system can dynamically adjust to optimize for speed and cost. So whether you’re running analytics or managing operations, you get peak performance without compromising on latency.

6. Embracing “Buy, Don’t Build” for Self-Service Data Management

When it comes to decentralized data management, building a custom solution from scratch can be a huge drain on time, resources, and specialized expertise. Instead, many organizations are shifting to a “buy, don’t build” approach, opting for readily available self-service data management software that brings together all these capabilities right out of the box. Solutions like Data Dynamics’ Zubin provide a robust, flexible platform for data governance, compliance, classification, and hybrid orchestration—all without the lengthy setup of custom development. By adopting a pre-built software, companies gain rapid deployment, with the ability to scale and adapt as their data needs evolve. Moreover, these platforms often come with built-in AI, advanced security features, and automation tools that keep data protected and accessible, letting teams focus on driving insights rather than managing infrastructure. The result? Faster time-to-value, easier compliance, and a streamlined path to a fully decentralized data environment that meets today’s standards.

So there you have it—a full breakdown of decentralized data management done right. Each piece of this strategy is essential for building a system that’s not only resilient and secure but also efficient and ready for the future. You’re setting up a structure that’s going to handle distributed data with ease, all while staying compliant and adaptable across different environments. It’s a winning strategy for any organization looking to scale.

To learn how Zubin can empower your organization to build smarter AI models through decentralized data management, visit www.datadynamicsinc.com or email us at solutions@datdyn.com.